25.07.2024

A day of chaos

After a botched software upgrade, Microsoft’s Blue Friday outage shows the price of putting software and IT infrastructure under the rule of capital, argues Paul Demarty

An old joke: in the university staff room, an argument breaks out about the origins of the universe.

A professor of civil engineering notes that God created the world in six days, which seems like a solid work of engineering; therefore, God is an engineer. A professor of mathematics objects: first of all, God created order out of chaos, and only mathematics creates order out of chaos; therefore God is a mathematician. The head of IT laughs loudly: where do you think the chaos came from?

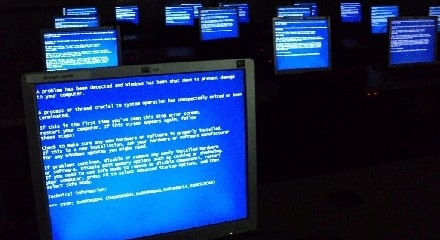

The propensity for computer systems to laugh in the face of the tasks we expect them to do is, by now, legendary. Last week’s cataclysmic outage on July 19, which affected Windows PCs and servers running a specific security software package - CrowdStrike’s Falcon system - rather underlined the matter. This was not, as failures often are, limited to one organisation or company. Indeed, in the scope of its effects, it was similar to cases where one of the major cloud computing providers - Amazon Web Services, say - has suffered a major breakage. A very, very large amount of random stuff just stopped working. Banks could not make transfers. Airports could not get people on flights. Hospitals could not see their schedules. The economic damage caused is estimated at $10 billion.

Donkey work

Though this is not a specialist technical publication, obviously, it is worth getting our hands dirty a little bit and trying to explain what happened.

Most general-purpose computers nowadays run an operating system - for example, Microsoft Windows, or Apple macOS, or some variant of the open-source Linux. The purpose of an operating system is to take care of the donkey work of instructing the computer hardware to do stuff; then programmers can focus on what their application is actually supposed to accomplish (browsing the web, playing a game, or whatever) rather than how exactly data gets written to memory, or how the file system works, or which of a million possible printers you have connected.

In modern operating systems, the functionality that achieves these very low-level interactions with the computer hardware is carefully isolated from other software running on the machine. This core is usually called the kernel. The kernel has its own memory. Applications make the computer do stuff by asking the kernel to do it for them.
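For the curious, here is a minimal sketch of what 'asking the kernel' looks like from the application side. It is an illustration under stated assumptions, not a description of how any particular product works: the function name `write_and_read_back` is our own invention, and the calls it makes are Python's thin wrappers around the operating system's own file requests.

```python
import os
import tempfile


def write_and_read_back(message: bytes) -> bytes:
    """Store a short message in a temporary file and read it back.

    Hypothetical helper for illustration. The application never touches
    the disk itself: each os.* call below is a request to the kernel.
    """
    fd, path = tempfile.mkstemp()           # ask the kernel to create and open a file
    try:
        os.write(fd, message)               # ask the kernel to store the bytes
        os.lseek(fd, 0, os.SEEK_SET)        # ask the kernel to rewind to the start
        return os.read(fd, len(message))    # ask the kernel to hand the bytes back
    finally:
        os.close(fd)                        # hand the file descriptor back
        os.unlink(path)                     # ask the kernel to delete the file


if __name__ == "__main__":
    print(write_and_read_back(b"hello, kernel"))
```

If the application gets any of this wrong, the kernel can simply refuse the request, and only the application suffers.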

Sometimes, however, an application developer wants to have that same lower level of access as the kernel. This could be because sheer speed is of the essence. It could be because, say, you are a malicious hacker who wants to hijack the system! Or it could be because you are an IT security software company, and you want to see if a malicious hacker has in fact hijacked the system.

Enter Windows and CrowdStrike. The latter, in order to provide the level of protection it does, requires kernel access. Bugs in code running in the kernel, however, are extremely difficult to recover from reliably. So when an update to the Falcon software caused a crash, the consequence was that millions of computers immediately suffered the notorious ‘blue screen of death’, and had to be rebooted - and then crashed immediately again, ad infinitum. While a fix was, hypothetically speaking, available mere hours later, applying it involved interrupting the system boot process - something most ordinary users would not know how to do; so full recovery for many institutions would take days.1
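The crash loop described above can be caricatured in a few lines. This is a toy model only: every name in it (`KernelPanic`, `load_driver`, `boot`, `boot_until_stable`) is hypothetical, and nothing here reflects the actual Windows boot sequence or CrowdStrike's code. It shows only why rebooting does not help while the faulty update stays on disk, and why somebody has to step in by hand.

```python
class KernelPanic(Exception):
    """Toy stand-in for a crash inside the kernel (hypothetical)."""


def load_driver(update_is_good: bool) -> None:
    # Assumption: the driver reads its update file on every boot and
    # faults if the file is bad. There is nothing below it to catch the error.
    if not update_is_good:
        raise KernelPanic("driver faulted while reading its update")


def boot(update_is_good: bool) -> bool:
    """Return True if the machine comes up, False if it blue-screens."""
    try:
        load_driver(update_is_good)
    except KernelPanic:
        return False
    return True


def boot_until_stable(update_is_good, manual_fix_after=None, max_attempts=10):
    """Count boot attempts until one succeeds; None if none ever does.

    manual_fix_after: number of failed boots after which someone removes
    the bad file by hand (None means nobody intervenes).
    """
    for attempt in range(1, max_attempts + 1):
        if manual_fix_after is not None and attempt > manual_fix_after:
            update_is_good = True  # the bad file has been deleted by hand
        if boot(update_is_good):
            return attempt
    return None
```

With no intervention, `boot_until_stable(False)` never succeeds; with a manual fix after three failed boots, the fourth attempt does.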

So whose fault was this? On the face of it, the answer would appear to be CrowdStrike’s. This update was apparently pushed out without being tested. This is a disastrous failure of process, given the consequences of screwing things up in kernel-space. (Anti-malware software has, let us say, a poor reputation among IT professionals for its intrusiveness and threat to reliability, and is tolerated as a necessary evil in the age of WannaCry and Stuxnet.) The company’s CEO apologised.2 Case closed?

Monopoly

Not quite. Long-time users of Windows may have noticed that they have seen a lot less of the ‘blue screen of death’ in recent years. This is no accident: earlier versions of the OS had a rather cavalier attitude to isolating the kernel. This produced endless security nightmares. It should be remembered that Apple’s selling point for their Mac computers for many years was “They just work” - that is, they don’t crash all the time, they’re not nearly as vulnerable to viruses and malware, etc.

Apple had a point. The near-total monopoly of Windows (and before it, MS-DOS) in the PC market, from the late-1980s to the mid-2000s, led to enormous complacency. The fact that the whole OS was proprietary meant that nobody outside the company could easily discover any problems. In that same period, the modern open-source software movement kicked into gear, and the earliest version of Linux was published in 1991. It was one of a family of free operating systems that took inspiration from Unix - an extremely successful OS developed mostly in the 1970s. Another was FreeBSD, from which all Apple’s modern OSes borrow substantial parts.

The open source movement has not produced much in the way of consumer apps that compete with commercial offerings (though nearly all incorporate substantial portions of open-source code), but its effect on the operating system world was immense. Linux and the various BSDs - FreeBSD, OpenBSD and so on - benefited from wide, open collaboration between ferociously talented specialists. Windows did not, and woefully failed to meet the standards that were raised, again and again, by this gift economy of cranky nerds.

After Linux began to dominate in the server market - that is, computers in data centres that run large workloads and typically connect to the internet - and a revived Apple began nibbling away at the PC market, Microsoft was at length shamed into improving the fundamentals of Windows. Yet it is still several steps behind. Both macOS and Linux have developed ways to give applications safer kernel access, with reduced risk of catastrophic failure. Microsoft is still working on Windows support for, essentially, the Linux version of this - something called eBPF (which we will not go into) - but has yet to ship it, and it will initially lack a lot of the features of the Linux implementation. By failing to meet the bar, Microsoft arguably set CrowdStrike up to fail.3

There are two political-economic problems posed here: one is the inherent capitalist tendency towards monopoly; and the other is the organisation of work in this unique industry. Like all monopolies, Microsoft’s dominance over the PC market brought the company what was essentially a perpetual rent. The coercive laws of competition are put, for long stretches of time, to one side.

Yet the PC operating system monopoly is hardly the most significant in the modern technology industry. Another is the extreme centralisation of the silicon chip market in very few hands. Intel continues to dominate in the PC and server market. Several different chip designs are to be found in modern consumer devices like smartphones (and Apple now uses its own silicon in its PCs); but almost all are manufactured by a single, enormous Taiwanese company, TSMC. This has become a direct political issue in the United States, since its insistence on ever greater brinksmanship with the People’s Republic of China makes TSMC a potentially dangerous chokepoint if things really kick off over the island’s status.

Intel has long been stagnant, and faces challenges in both the PC and server markets. Yet it is Intel that principally benefits from the substantial subsidies created by the US CHIPS Act, which attempted to onshore more semiconductor manufacture. (We should also mention Nvidia, whose products are traditionally used for graphics processing, but are also extremely well suited to modern AI applications - and are also actually manufactured by TSMC.)

Similar concentration has taken place in the server business, with the increasing dominance of a few giant cloud computing companies. Amazon Web Services and Microsoft’s Azure platform battle for dominance, with Google’s Cloud Platform in a distant third place. All of these, notably, are spin-offs of some of the largest existing technology concerns (sometimes called ‘hyperscalers’). This is arguably more of a natural monopoly. Though it is easier to build your own data centre in a particular location than it is to, say, create a competing rail line or sewer network to displace an incumbent, very similar problems result - capacity issues (whether wasteful overprovisioning or unnecessary bottlenecks), vendor lock-in due to very slightly different interfaces to very similar underlying services (somewhat analogous to the proliferation of different railway gauges in the 19th century), and so on.

Because these are private monopolies, meanwhile, they are subject to the same irrational contingencies of the business cycle as other capitalist firms. This is very clearly visible today, with enormous capital investment in new data centres to deal with demand for much-hyped AI applications, which are monstrously power- and water-hungry (for cooling). Is this really wise? So far, the boom in large language models has produced little more than novelty toys (normally slightly broken toys at that). There is no sign that a great breakthrough is coming; these things do tend to come as a surprise, of course, but the only breakthrough worth having would mean making these things more efficient. The main purpose of all this expenditure seems to be merely a vain attempt to restore the kind of ready access to capital the tech industry enjoyed in the low-interest-rate era.

A socialised version of the cloud giants - call it the People’s Cloud Platform, let’s say - would take advantage of the very real economies of scale on offer here, but rationalise capacity planning, and allow for a certain amount of experimentation - the deployment of novel chip architectures and operating systems, for example - that would then be available to all, not just the customers of some particular vendor at a steep mark-up. A democratically planned cloud, in short, would bring some of the same rigour and experimentation we have seen in the open-source movement at its best to a different part of the overall IT picture.

Future

Which leaves us with, precisely, the problem of proprietary software and open source. The response of some on the left - anarchistic techno-utopians, say - has been gleefully enthusiastic: they have seen great promise in the open source model for a modern technological economy not based on private property. On the opposite end, some more sceptical Marxists have viewed it as little more than an appendage of the tech giants.

The wonder of the dialectic is that it can be both. It is certainly the case that much of the value of open-source software accrues in profits to major tech firms who use it in commercial offerings, and this is more and more the case. It is also true that, as a result, the population of contributors to open source software has shifted from the old mass of enthusiastic volunteers to engineers being paid deliberately by companies to work on these projects, sometimes on a very large scale. The dominance of certain major individuals in the ecosystem is also a sticking point. Yet it remains a gift economy, albeit one fraying a little at the edges; and in certain critically important areas it has outperformed proprietary equivalents to an astonishing degree. The tech giants have glommed onto it to avoid being left behind.

In that respect, there is some real utopian promise here that should not be dismissed. We could draw the analogy with Marx’s occasional references in Capital to experiments in self-management in factories, or even - more distantly - his musings on the potential future of the mir village commune system in Russia, which might perhaps be transformed directly into a fully collective agricultural economy if revolution were successful in Russia’s urban centres. The point was not that the mir was the form of the future, never mind some particular Owenite scheme, but that they were things that pointed imperfectly to the future and were thus raw material for it in a real sense.

Certainly, the capitalist drive for profit makes large parts of our critical IT infrastructure less reliable, less secure and more wasteful. Who knows how we will make operating systems in the future - but one hopes it will be more like Linux, and less like Windows!

1. CrowdStrike’s own post-mortem has more details: www.crowdstrike.com/blog/falcon-update-for-windows-hosts-technical-details.

2. www.crowdstrike.com/falcon-content-update-remediation-and-guidance-hub/.

3. See, for example, the comments of operating systems veteran Brendan Gregg: www.brendangregg.com/blog/2024-07-22/no-more-blue-fridays.html.